How Do I: Develop an Extension in Business Central On Premises?

{Solved} Call Web API from within a plugin in Dynamics 365 online

PowerDataQ | Data Cleansing Service for Microsoft Dynamics 365

For modern companies it is important to have data consistency validation systems and data enrichment services that implement a strategic approach to ensure greater analytical productivity. A large database is not necessarily an asset to a company if it contains inaccurate or fragmented data.

For this reason, we recommend our PowerDataQ service for all our customers, because it not only optimizes databases, but also generates value for their marketing and sales teams.

Our Data Cleansing solution helps to format, classify, modify, replace, organize, and correct the information collected from various data sources, in order to create a unique and homogeneous database.

MB2-877 Microsoft Dynamics 365 for Field Service Module 1 Topic – Perform initial configuration steps

Sample Code to retrieve instances using Online Management API in Dynamics 365 Customer Engagement

A quick look at download Retail distribution jobs (CDX)

Commerce Data Exchange (CDX) is a system that transfers data between the Dynamics 365 F&O headquarters database and the retail channel databases (RSSU/offline database). The retail channel databases can be the cloud-based “default” channel database, the RSSU database, and the offline databases on the MPOS devices. If you look at the following figure from Microsoft docs, this blog post explains how to understand it in practice.

What data is sent to the channel/offline databases?

In the retail menus you will find two menu items: Scheduler jobs and Scheduler subjobs. These define the different data that can be sent.

When setting up Dynamics 365 for the first time, Microsoft have defined a set of ready-to-use scheduler jobs that get created automatically by the “initialize” menu item, as described here.

A scheduler job is a collection of the tables that should be sent, and the subjobs contain the actual mapping between D365 F&O and channel database fields. As seen in the next picture, the fields of the table CustTable in D365 are mapped to AX.CUSTTABLE in the channel database.

To explore what is or can be transferred, look through the Scheduler jobs and Scheduler subjobs.

Can I see what data is actually sent to the channel/offline databases?

Yes you can! In the retail menu, you should be able to find Commerce Data Exchange, and a menu item named “Download sessions”.

Here you should see all data that is sent to the channel databases, and there is a menu item named “Download file”.

This downloads a zip file containing CSV files that correspond to the Scheduler jobs and Scheduler subjobs.

You can open these files in Excel to see the actual contents. (I have hidden a few columns and formatted the Excel sheet to look better.) This means you can see the actual data being sent to the RSSU/offline channel database.
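If you would rather get a quick overview than open each CSV file in Excel, the package can also be inspected with a few lines of script. The following is a minimal sketch, not something that ships with Dynamics 365: the function name is made up for illustration, and the UTF-8 encoding and comma delimiter are assumptions about the exported files that you should verify against your own download.

```python
import csv
import io
import zipfile


def summarize_download_package(zip_source):
    """Return {csv_file_name: row_count} for every CSV file in the zip.

    zip_source can be a path or a binary file-like object.
    Assumption: the CSV files are UTF-8 (with or without BOM) and
    comma-delimited; adjust if your download session differs.
    """
    summary = {}
    with zipfile.ZipFile(zip_source) as package:
        for name in package.namelist():
            if not name.lower().endswith(".csv"):
                continue  # skip anything that is not a CSV file
            with package.open(name) as raw:
                text = io.TextIOWrapper(raw, encoding="utf-8-sig", newline="")
                # Count every CSV record; no header row is assumed.
                summary[name] = sum(1 for _ in csv.reader(text))
    return summary
```

Calling `summarize_download_package("DownloadSession.zip")` (a hypothetical file name) gives one row count per CSV file, which makes it easy to spot the subjobs that are pushing unexpectedly large amounts of data.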

All distribution jobs can be set up as batch jobs with different execution recurrence. If you want to keep it simple, just set download distribution job 9999 to run every 30 minutes. If you have a more complex setup and need better control over when data is sent, make separate distribution batch jobs so that you can send new data to the channel databases in periods when there is less load in the retail channels.

Too much data is sent to the channel databases/offline database and the MPOS is slow?

Retail uses change tracking, which makes sure that only new and updated records are sent and that the amount of data is minimized. There is an important parameter that controls how often a FULL distribution should be executed. By default it is 2 days. If you have lots of products and customers, we see that this generates very large distribution jobs, with millions of records to distribute. Setting the parameter to zero prevents this. Very large distributions can cripple your POS devices, and your users will complain that the system is slow or that they get strange database errors. In version 8.1.3 the default is expected to change to zero, meaning that full datasets will not be distributed automatically.

Change tracking seems not to be working?

As you may know, Dynamics 365 has also added the possibility to enable change tracking on data entities when using BYOD. I have experienced that adjusting this affects the change tracking that retail requires. If this happens, use Initialize retail scheduler to set it right again.

Missing upload transactions from your channel databases?

In some rare cases, transactions have been missing in D365 compared to what the POS shows. The trick to resend all transactions is the following:

Run the script “delete crt.TableReplicationLog” in the RSSU DB. The next P-job will then sync all transactions from the RSSU DB (including the missing ones).

NAV TechDays 2018 – Evolution of a titan: a look at the development of NAV from an MVP angle

In 3 days, it’s game-time again. THE best DEV conference for Business Central will take place in Antwerp: NAV TechDays – and I’m honoured to be a part of it again. But this year – it’s quite different, because I signed up for a challenge. This year, I’ll be joining Waldo on stage for a very special session:

Evolution of a titan: a look at the development of NAV from an MVP angle

If you think this would be a boring look back at the stuff from the past that you can read about on wikipedia – you would be badly mistaken. Here is the link to wikipedia, because you won’t get that from our session.

We are not there to talk about the past. We are there to talk about what challenges – no, opportunities – are ahead, and how we as developers can act upon these challenges. We will talk about (what we think of as) the most important parts of the technology stack that have touch points with NAV (sorry, “Business Central”), and what impact they have on the future perspective.

And – Waldo wouldn’t do a session without killer demos (that’s why he asked me to join ..). Prepare yourself for no less than 45 minutes of no-nonsense-cutting-edge-demos that will blow your socks off (bring an extra pair, just in case!).

I don’t know about you, but I’m very excited to do this session together with Waldo – if you have seen any of his sessions, you know it will be totally worth your time!

See you there on Thursday at 4pm!

Read this post at its original location at http://vjeko.com/nav-techdays-2018-evolution-of-a-titan-a-look-at-the-development-of-nav-from-an-mvp-angle/, or visit the original blog at http://vjeko.com.

The post NAV TechDays 2018 – Evolution of a titan: a look at the development of NAV from an MVP angle appeared first on Vjeko.com.

NAVTechDays 2018 – Evolution of a titan: a look at the development of NAV from an MVP angle

In 3 days, it’s game-time again. THE best DEV conference for Business Central will take place in Antwerp: NAVTechDays – and I’m honoured to be a part of it again. But this year – it’s quite different, because I signed up for a challenge. This year, I’ll be joining Vjeko on stage for a very special session:

Evolution of a titan: a look at the development of NAV from an MVP angle

If you think this would be a boring look back at the stuff from the past that you can read about on wikipedia – you would be badly mistaken. Here is the link to wikipedia, because you won’t get that from our session.

We are not there to talk about the past. We are there to talk about what challenges – no, opportunities – are ahead, and how we as developers can act upon these challenges. We will talk about (what we think of as) the most important parts of the technology stack that have touch points with NAV (sorry, “Business Central”), and what impact they have on the future perspective.

And – Vjeko wouldn’t do a session without killer demos (that’s why he asked me to join .. ;-)). Prepare yourself for no less than 45 minutes of no-nonsense-cutting-edge-demos that will blow your socks off (bring an extra pair, just in case!).

I don’t know about you, but I’m very excited to do this session together with Vjeko – if you have seen any of his sessions, you know it will be totally worth your time!

See you there on Thursday at 4pm!

Dynamics 365 Business Central – On Premise, what’s new #2

Flow to Synch Dynamics 365 with an On Premise Database

How Do I: Create a Dynamics 365 Business Central Demo/Sandbox Environment?

Different Types of CRM Portals

D365F&O Retail: Combining important retail statement batch jobs

The Retail statement functionality in D365F&O is the process that puts everything together and makes sure transactions from the POS flow into D365F&O HQ. Microsoft have made some improvements to the statement functionality that you can read about here: https://docs.microsoft.com/en-us/dynamics365/unified-operations/retail/statement-posting-eod. I wanted to show how to combine the upload, calculate and post processes into a single batch job.

The following drawing is an oversimplification of the process, but here the process starts with the opening of a shift in the POS (with start amount declaration), followed by selling in the POS. Each time the job P-0001 upload channel transaction is executed, the transactions are fetched from the channel databases and imported into D365F&O. If you are using shift-based statements, a statement will be calculated when the shift is closed. Using shift-based closing can be tricky, but I highly recommend doing this! After the statement is calculated and there are no issues, the statement will be posted, and an invoiced sales order is created. Then you have all your inventory and financial transactions in place.

What I often see is that customers use 3 separate batch jobs for this. This results in a user experience where the retail statement form contains many calculated statements waiting for statement posting. Some customers say they only want to see statements where there are issues (like cash differences after the shift is closed).

By combining the batch jobs into a sequenced batch job, the calculated statements will be posted right away, instead of waiting until the post statement batch job is executed. Here is how to set this up:

1. Manually create a new “blank” batch job

2. Click on “View Tasks”.

3. Add the following 4 classes:

RetailCDXScheduleRunner – Upload channel transaction (also called P-job)

RetailTransactionSalesTransMark_Multi – Post inventory

RetailEodStatementCalculateBatchScheduler – Calculate statement

RetailEodStatementPostBatchScheduler – Post statement

Here I choose to include upload of transactions, post inventory, calculate statement and post statement into a single batch-job.

Also remember to ignore task failures.

And remember to click on “parameters” to set the parameters on each task, such as which organization nodes should be included.

On each batch task I also add conditions, so that the previous step needs to be completed before the batch-job starts on the next.

Then I have 1 single batch job, and when executing it spawns subsequent tasks nicely.

The benefit of this is that when you open the statements workspace you mostly see statements where there are cash differences, or where there are issues with master data.

Take care and post your retail statements.

MB6-898 Use the Employee self-service workspace to manage personal information

Tip #1195: Your D365 V9 On Premise Questions Answered

We had a chance to check out Dynamics 365 v9 On Premise, and in the process answered some of our lingering questions.

Does v9 On Premise have Unified Interface?

Yes — Unified Interface is included in V9OP. You can create new model driven apps and select between web and classic UI. Note it is the 9.0.2 version of Unified Interface — some of the goodies like Advanced Find that are available in Unified Interface online are not available in V9OP. Also, configuration of the Outlook App Module is not available in V9OP.

What about mobile?

Since Unified Interface is available in V9OP, the mobile app experience will reflect the Unified Interface. Like with V9 Online, the Mobile Express forms are also gone.

Virtual entities

Virtual entities are supported in V9OP.

Got other questions about Dynamics 365 v9 On Premise? Send them to us at jar@crmtipoftheday.com.

(Facebook and Twitter cover photo by Dayne Topkin on Unsplash)

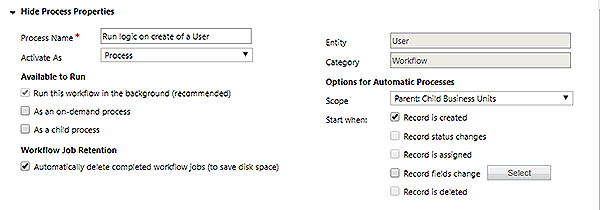

Run Workflows on Create of a User in Dynamics 365

As mentioned in a previous blog, plugins are not triggered on create of a user in CRM 365 online. In that blog, I talked about how you can trigger them on associate of a user to a security role. In this blog, I will talk about another alternative.

When you create a user in CRM online, plugins are not triggered, BUT workflows registered on create of a user are! By registering a workflow on create of a user, we can manage our plugin logic through workflow configuration, which makes it easier to create and deploy small changes to logic without much disruption to your users. Alternatively, you could write a custom CodeActivity to handle more complex logic and run this in your workflow. Running your logic in a workflow will also work if you want to trigger workflows on update of a user.

Unfortunately, you cannot run workflows on status change of a user nor on update of the status field. If you have logic that should be run when a user is disabled you will have to trigger this manually.

I hope this will help you to get around issues you have in running plugins on create or update of a user in Dynamics 365.

Accelerate your Dynamics 365 for Finance & Operations Upgrade

Developer Preview - November 2018

Getting logged in user roles in client side in Dynamics V9.0

Upgrading to Jet 2019: Install Jet Analytics

This post is part of the series on Upgrading to Jet 2019.

Before installing the new version of Jet Analytics, it is worth noting that Jet recommend leaving the old version installed while installing the new. There is no reason given for this, but I presume it is to allow for easy rollback in the event of problems.

To install Jet Analytics, download the Jet Analytics software:

Once you have downloaded the zip file, extract all files and, from the 1 – Jet Analytics – REQUIRED folder, run the required Setup.exe:

On the Welcome screen, click Next to start:

Accept the terms of the End-User License Agreement and click Next:

Leave the setup type set to Typical and click Next:

Click Install to begin the installation:

Once the installation is finished, click Finish:

The next step, covered in the next post, is to configure the new version of the Jet Data Manager Server.

| Upgrading to Jet 2019 |
|---|
| What's New In Jet Analytics 2019 |
| Install Jet Analytics |

Read original post Upgrading to Jet 2019: Install Jet Analytics at azurecurve|Ramblings of a Dynamics GP Consultant